Everyone is obsessed with the idea of “hacks.”

Like many others, I’m passionate about finding solutions that make my life simpler and better.

That’s why I am so excited to share this incredible SEO hack with you today! It is a legitimate technique that you can immediately start using.

Unlock the SEO potential of your website with a simple and effortless technique – this one is often overlooked, but it can make an enormous difference. Implementing this strategy takes no time at all!

I'm talking about the robots.txt file (also known as the robots exclusion protocol or standard).

You may never have heard of it, but this small text file lives on most websites across the Internet.

It's designed to work with search engines, but surprisingly, it's also a source of SEO power just waiting to be unlocked.

I’ve observed clients go to extraordinary lengths in an attempt to elevate their SEO. When I advise them that they can just tweak a small text file and get the same result, it almost seems too simple for them to believe.

Despite the complexity of SEO, there are several easy and quick ways to improve it. Applying these methods will help you achieve better results in no time.

You don't need any technical experience to use robots.txt. If you can access your website's source code, you can do this.

Are you ready to make your robots.txt file a hit with search engines? If so, follow my instructions and I will reveal the precise steps needed.

Understand How Vital the robots.txt file is to Your Website

To begin, let’s explore the importance of the robots.txt file and why it matters.

The robots.txt file, also known as the robots exclusion protocol or standard, is a small text file that tells web robots (most often search engines) which pages on your site to crawl.

It also tells web robots which pages not to crawl.

Before a search engine crawls a page on your site, it checks the robots.txt file for instructions.

There are different kinds of robots.txt files, so let's look at a few examples of what they look like.

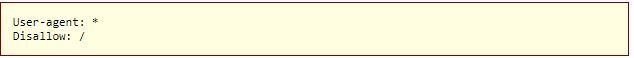

Suppose a search engine finds this example robots.txt file, which consists of two lines: `User-agent: *` followed by `Disallow: /`.

This is the basic skeleton of a robots.txt file.

The asterisk after "User-agent" means the file applies to all web robots that visit the site.

The slash after "Disallow" tells those robots not to visit any pages on the site.
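To see the effect of that skeleton, here's a minimal sketch in Python using the standard-library `urllib.robotparser` module, which implements the original robots exclusion rules. It parses the two-line file (`User-agent: *`, then `Disallow: /`) directly, without any network access, and checks two URLs on the placeholder domain `yoursite.com`:

```python
from urllib.robotparser import RobotFileParser

# The "block everything" skeleton: all robots, every path disallowed.
BLOCK_ALL = """\
User-agent: *
Disallow: /
"""


def make_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text directly instead of downloading it."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = make_parser(BLOCK_ALL)
for url in ["https://yoursite.com/", "https://yoursite.com/blog/post/"]:
    # "Disallow: /" is a prefix of every path, so every URL is refused.
    print(url, parser.can_fetch("Googlebot", url))
```

Both URLs come back `False`, which is the "keep out" signal described above.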

You may be curious as to why anyone would want to prohibit web robots from visiting their website.

After all, one of the main goals of SEO is to get search engines to crawl your site easily so they can rank it higher.

Here comes the key to unlocking this SEO strategy.

Are you aware of how many pages your site contains? If not, go ahead and have a look right now. You never know – it might just surprise you.

When search engine bots crawl your site, they will try to crawl every page they can find.

If your site has a lot of pages, it can take the search engine bot a while to crawl them all, which can hurt your ranking.

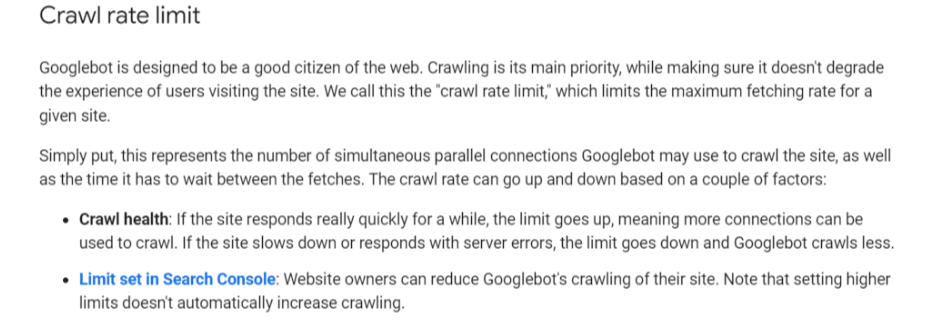

Googlebot, Google's search engine bot, has a "crawl budget" that limits how much of your site it will crawl.

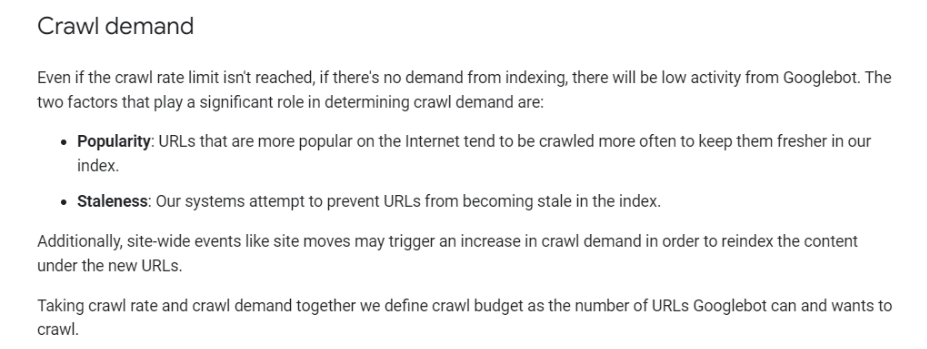

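Crawl budget has two parts. The first is the crawl rate limit: the maximum rate at which Googlebot will fetch pages from your site without overloading your server. It goes up when your site responds quickly and goes down when the site slows down or returns server errors.

The second part is crawl demand: how much Google wants to crawl your site, which depends mainly on how popular your URLs are and how stale Google's copies of them have become.

To put it simply, crawl budget is the number of URLs Googlebot can and wants to crawl.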

You want Googlebot to spend its crawl budget on your site wisely, which means crawling your most valuable pages.

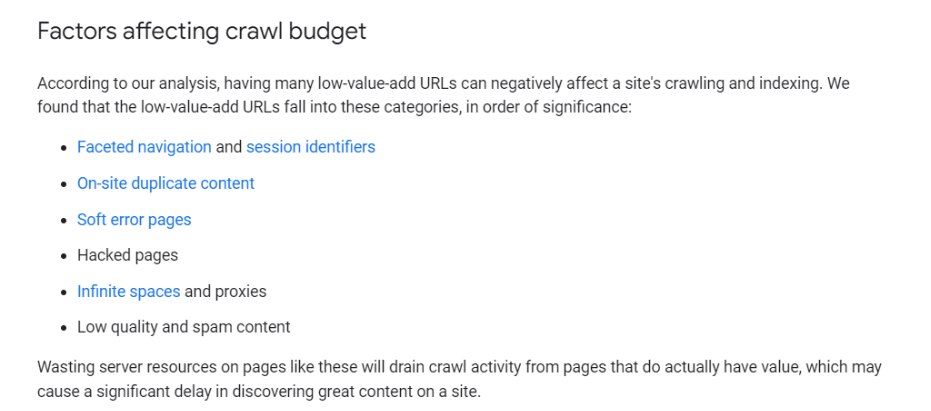

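Google has also found that having many low-value-add URLs can hurt how a site is crawled and indexed.

In order of significance, Google lists these categories: faceted navigation and session identifiers, on-site duplicate content, soft error pages, hacked pages, infinite spaces and proxies, and low-quality and spam content.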

Let’s return to the discussion of robots.txt and its importance.

By creating the right robots.txt file, you can tell search engine bots (and especially Googlebot) to avoid certain pages on your site.

Think about what that means. If you tell search engine bots to crawl only your most useful content, they'll spend their crawl budget on exactly what you choose.

According to Google, you don't want your server to be overwhelmed by Google's crawler or to waste crawl budget crawling unimportant or similar pages on your site.

By using your robots.txt file the right way, you can tell search engine bots to spend their crawl budgets wisely. That's what makes robots.txt such a powerful tool for your SEO strategy!

Are you curious about the incredible potential of robots.txt?

You should definitely be excited! Now, let’s discuss how to locate and use it for your benefit.

Where to Find Your robots.txt File

If you’re looking for a fast and easy way to view your robots.txt file, here’s the solution!

In fact, this method is applicable to any website. So you can examine other sites’ files to see the strategies they’re using.

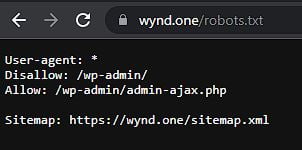

Simply type a site's base URL (for example, wynd.one or google.com) into your browser's address bar and add "/robots.txt" to the end.
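The same rule can be written as a small Python sketch. The helper names `robots_url` and `fetch_rules` are hypothetical; the parsing itself uses the standard-library `urllib.robotparser`. It assumes HTTPS when you type a bare domain. Whatever page you start from, robots.txt always sits at the root of the host:

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.robotparser import RobotFileParser


def robots_url(site: str) -> str:
    """Return the robots.txt address for a domain or any page URL."""
    if "://" not in site:
        site = "https://" + site  # assumption: HTTPS when no scheme is given
    parts = urlsplit(site)
    # Keep only scheme and host; robots.txt always lives at the root.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def fetch_rules(site: str) -> RobotFileParser:
    """Download and parse a live robots.txt file (needs network access)."""
    parser = RobotFileParser(robots_url(site))
    parser.read()  # a 404 response is treated as "everything allowed"
    return parser


print(robots_url("wynd.one"))                       # https://wynd.one/robots.txt
print(robots_url("https://google.com/search?q=x"))  # https://google.com/robots.txt
```

Calling `fetch_rules("google.com")` and then `can_fetch(...)` on the result is a quick way to explore another site's rules from code.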

One of three things will happen: you'll find a robots.txt file, you'll find an empty file, or you'll get a "404 Not Found" error.

Take a moment to look at your own site's robots.txt file.

If you find an empty file or get a 404 error, you'll want to fix that.

If you find a valid file, it probably still has the default settings that were created when your site was built.

I especially like this method for looking at other sites' robots.txt files. Once you learn the ins and outs of robots.txt, it can be a valuable exercise.

Now, let’s look at the process of modifying your robots.txt file.

Locating Your Robots.txt File

Your next steps depend on whether you found a robots.txt file in the previous step.

To create a robots.txt file from scratch, you will need to open up a text editor such as Notepad (Windows) or TextEdit (Mac).

Use only a plain text editor for this. Word processors such as Microsoft Word can insert extra formatting code into the file.

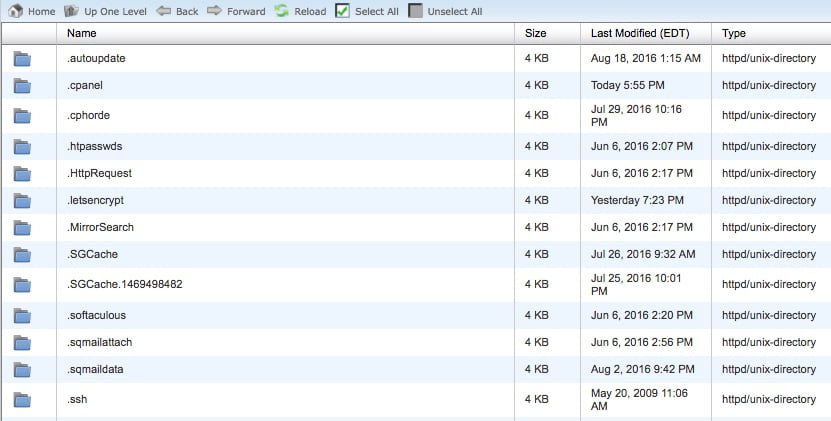

To find the file, you’ll have to look in your website’s root directory.

If you’re new to digging around in source code, it can be a bit tough to find the editable version of your robots.txt file.

To find your root directory, log in to your hosting account and open its file manager or FTP section.

There you should see your site's top-level files and folders.

Open your robots.txt file for editing, then delete its contents but keep the file itself.

Keep in mind that if you use WordPress, you may be able to view a robots.txt file at yoursite.com/robots.txt even though it doesn't appear among your site's files in the back end.

That's because WordPress serves a virtual robots.txt file when no physical file exists in the root directory.

If that's the case for you, the best solution is to create a new robots.txt file.

Creating a robots.txt File

You can create a new robots.txt file with any plain text editor.

If you already have a robots.txt file, make sure you've deleted its contents (but not the file itself).

To get started, you'll need to know the syntax used in a robots.txt file.

Google publishes a helpful guide to the basics of robots.txt syntax.

I'm going to show you how to set up a simple robots.txt file and then customize it for better SEO.

Start by setting the user-agent term so the rules apply to all web robots.

To do that, type an asterisk after the user-agent term: `User-agent: *`

Next, type "Disallow:" and leave the rest of the line empty.

Because nothing follows the disallow directive, web robots may crawl your entire site. Right now, everything on your site is open to them.

At this point, your robots.txt file should contain just these two lines: `User-agent: *` followed by `Disallow:`.

It may appear simple, but these two lines are doing a lot of work.
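Here's a quick sanity check of that two-line file (`User-agent: *` followed by an empty `Disallow:`). It's a sketch that runs the file through Python's standard-library `urllib.robotparser`; `yoursite.com` is a placeholder domain:

```python
from urllib.robotparser import RobotFileParser

# The basic file: every robot, nothing disallowed.
ALLOW_ALL = """\
User-agent: *
Disallow:
"""

parser = RobotFileParser()
parser.parse(ALLOW_ALL.splitlines())

# An empty Disallow value blocks nothing, so every URL stays crawlable.
for path in ["/", "/blog/", "/wp-admin/"]:
    print(path, parser.can_fetch("Googlebot", "https://yoursite.com" + path))
```

Every path prints `True`, which is exactly the "crawl everything" behavior described above.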

You can also link to your XML sitemap, but it's optional.

Believe it or not, this is what a basic robots.txt file looks like.
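If you do link your sitemap, it goes on its own `Sitemap:` line with the full URL. The sketch below assumes a sitemap at `https://yoursite.com/sitemap.xml`, which is a placeholder. It parses the file with Python's `urllib.robotparser` (`site_maps()` needs Python 3.8 or later) to show that the line is read separately from the user-agent rules:

```python
from urllib.robotparser import RobotFileParser

# Basic file plus an optional sitemap line (placeholder URL).
WITH_SITEMAP = """\
User-agent: *
Disallow:

Sitemap: https://yoursite.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(WITH_SITEMAP.splitlines())

# Sitemap lines don't belong to any User-agent group.
print(parser.site_maps())  # ['https://yoursite.com/sitemap.xml']
print(parser.can_fetch("Googlebot", "https://yoursite.com/any-page/"))  # True
```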

Now let’s take this small file and quickly transform it into a powerful SEO tool.

Use robots.txt for SEO Optimization

How you optimize robots.txt depends on the content of your site, and there are plenty of ways to use it to your advantage.

Let’s explore some of the most popular ways to take advantage of it.

Keep in mind that you shouldn't use robots.txt to keep pages out of search engine results.

One of the best uses of the robots.txt file is to make the most of search engines' crawl budgets. You do this by telling them not to crawl the parts of your site that aren't meant for the public, so they can focus on the content that is.

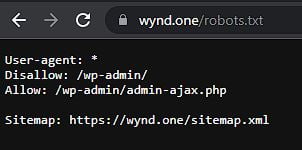

As an example, the robots.txt file for this site (wynd.one) disallows access to its login page (wp-admin).

Given that the page serves solely as a login portal for the backend of the website, it doesn’t make sense to have search engine bots crawl it – this would be an unnecessary waste of time.

If you use WordPress, you can use the same disallow line.
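Here's a sketch for a WordPress site at the placeholder domain `yoursite.com`. This Python snippet parses a file with that single rule and shows which URLs a bot may still crawl. Because a Disallow path matches by prefix, `/wp-admin/` also covers every page inside that folder:

```python
from urllib.robotparser import RobotFileParser

# Keep bots out of the WordPress admin area; everything else stays open.
WP_RULES = """\
User-agent: *
Disallow: /wp-admin/
"""

parser = RobotFileParser()
parser.parse(WP_RULES.splitlines())

for path in ["/wp-admin/", "/wp-admin/options.php", "/blog/my-post/"]:
    allowed = parser.can_fetch("Googlebot", "https://yoursite.com" + path)
    print(f"{path:25} {'allowed' if allowed else 'blocked'}")
```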

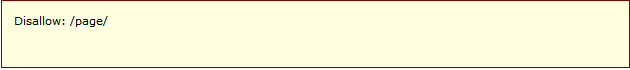

By utilizing the same directive or command, you can stop bots from crawling particular areas of your website. After disallowing, simply enter the part of your URL that is situated after “.com” and ensure it’s placed between two forward slashes.

For example, to stop bots from crawling the page http://yoursite.com/page/, add this line: `Disallow: /page/`

You may be wondering which kinds of pages to exclude. Here are a few common scenarios:
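The rule is simply the path portion of the URL. The hypothetical helper `disallow_line` below is a sketch, not a standard tool. It pulls that path out of a full URL with Python's `urllib.parse`, then uses the standard-library `urllib.robotparser` to confirm that only the intended page is blocked:

```python
from urllib.parse import urlsplit
from urllib.robotparser import RobotFileParser


def disallow_line(url: str) -> str:
    """Build a Disallow rule from the part of `url` after the domain."""
    path = urlsplit(url).path or "/"
    return f"Disallow: {path}"


rule = disallow_line("http://yoursite.com/page/")
print(rule)  # Disallow: /page/

parser = RobotFileParser()
parser.parse(["User-agent: *", rule])
print(parser.can_fetch("Googlebot", "http://yoursite.com/page/"))   # False
print(parser.can_fetch("Googlebot", "http://yoursite.com/other/"))  # True
```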

Although duplicate content is usually discouraged, there are some cases where it’s necessary and acceptable.

For example, if a page has a printer-friendly version, the two count as duplicate content. In that case, you can tell search engine bots not to crawl one of them (usually the printer-friendly version).

The same goes for split-testing pages that have identical content but different designs.

Thank you pages are another example. Marketers love them because each one means a new lead.
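Suppose, hypothetically, that your printer-friendly copies live under a `/print/` folder (the folder name is an assumption for illustration). A Disallow rule matches by prefix, so one line covers every page in that folder. The trailing slash keeps the rule from catching unrelated URLs that merely start with "print". This sketch checks both cases with Python's `urllib.robotparser`:

```python
from urllib.robotparser import RobotFileParser

# Hypothetical layout: printer-friendly duplicates live under /print/.
RULES = """\
User-agent: *
Disallow: /print/
"""

parser = RobotFileParser()
parser.parse(RULES.splitlines())

checks = {
    "/print/robots-guide/": False,  # duplicate copy: blocked
    "/robots-guide/": True,         # original article: still crawlable
    "/printers-we-love/": True,     # starts with "/print" but not "/print/"
}
for path, expected in checks.items():
    assert parser.can_fetch("Googlebot", "https://yoursite.com" + path) is expected
print("all checks passed")
```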

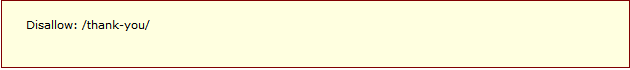

It may appear harmless, but some thank you pages are actually indexed by Google. This means that people can access these pages directly from the search engine without having to complete a lead capture form first – something that should be avoided.

By blocking your thank you pages, you make it more likely that only qualified leads see them.

If your thank you page is at https://yoursite.com/thank-you/, you can stop bots from crawling it by adding this line to your robots.txt file: `Disallow: /thank-you/`
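As your list of blocked pages grows (the admin area, a thank you page, and so on), it can help to generate the file instead of editing it by hand. `build_robots_txt` below is a hypothetical helper, not a standard tool, and the sitemap URL is a placeholder. The sketch also confirms that the trailing slash in `/thank-you/` keeps the rule from catching other URLs that merely begin with "/thank-you":

```python
from typing import Optional, Sequence
from urllib.robotparser import RobotFileParser


def build_robots_txt(disallow: Sequence[str], sitemap: Optional[str] = None) -> str:
    """Assemble a robots.txt that applies the given Disallow paths to every bot."""
    lines = ["User-agent: *"]
    # With no paths, fall back to an empty Disallow (allow everything).
    lines += [f"Disallow: {path}" for path in disallow] or ["Disallow:"]
    if sitemap:
        lines += ["", f"Sitemap: {sitemap}"]
    return "\n".join(lines) + "\n"


robots = build_robots_txt(["/wp-admin/", "/thank-you/"],
                          sitemap="https://yoursite.com/sitemap.xml")
print(robots)

parser = RobotFileParser()
parser.parse(robots.splitlines())
print(parser.can_fetch("Googlebot", "https://yoursite.com/thank-you/"))              # False
print(parser.can_fetch("Googlebot", "https://yoursite.com/thank-you-for-reading/"))  # True
```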

Every website has its own specific needs, so creating the perfect robots.txt file for your site requires careful thought and consideration.

It’s important to keep in mind two other directives: noindex and nofollow.

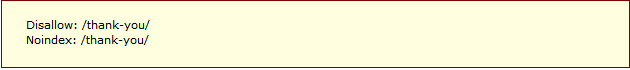

The disallow directive doesn't actually stop a page from being indexed.

In theory, a page you've disallowed can still end up in search results, for example if other sites link to it.

Generally, that's not something you want.

That's where the noindex directive comes in. Google stopped supporting noindex rules inside robots.txt in September 2019, so add it to the page itself as a robots meta tag between the page's `<head>` tags: `<meta name="robots" content="noindex">`.

For exclusive pages such as thank you pages, rely on noindex rather than disallow. A bot that robots.txt blocks from crawling the page never sees the noindex tag.

Once search engines recrawl the page and see the tag, it will no longer appear in the search engine results pages.

Lastly, there is the nofollow directive. It works like a nofollow link: it tells web robots not to follow the links on a page.

However, the nofollow directive isn't part of the robots.txt file, so you apply it differently.

It still gives web robots an instruction on the same principle.

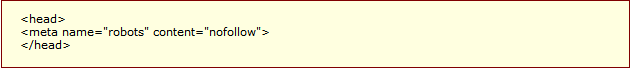

Open the source code of the page you want to change, and place your cursor between the `<head>` tags.

Then paste in this line: `<meta name="robots" content="nofollow">`

Make sure the line sits between the `<head>` tags and nowhere else.

This is another good option for thank you pages, because web robots won't follow the links to exclusive content such as lead magnets.

If you want to use both the noindex and nofollow directives, add this line instead:

`<meta name="robots" content="noindex,nofollow">`

This gives web robots both directives at once.
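Crawlers read these directives from the page itself, not from robots.txt. The sketch below uses Python's standard `html.parser` to show roughly how a bot could pick them out of a page's HTML. `RobotsMetaReader` and `robots_directives` are hypothetical helper names. Real search engines handle more cases, such as bot-specific tags like `<meta name="googlebot">`:

```python
from html.parser import HTMLParser


class RobotsMetaReader(HTMLParser):
    """Collect directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            # Directives are comma-separated and case-insensitive.
            self.directives.update(
                d.strip().lower() for d in content.split(",") if d.strip()
            )


def robots_directives(html: str) -> set:
    reader = RobotsMetaReader()
    reader.feed(html)
    reader.close()
    return reader.directives


page = """<html><head>
<title>Thanks!</title>
<meta name="robots" content="noindex,nofollow">
</head><body>Your download link is below.</body></html>"""

print(sorted(robots_directives(page)))  # ['nofollow', 'noindex']
```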

Testing everything out

Last but not least, ensure that your robots.txt file is functioning properly and efficiently by testing it out.

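To check that your file is set up correctly for web crawlers, you can use the free robots.txt Tester that Google offered in Webmaster Tools (now called Google Search Console). Google has since replaced this tester with a robots.txt report in Search Console, so the steps below describe the older interface.

First, sign in to your Webmaster Tools account using the "Sign In" button in the top right corner.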

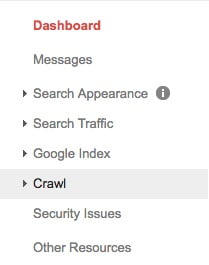

Select your property (i.e., website) and hit the “Crawl” button in the left-hand sidebar to get started.

Click on “Robots.txt Tester.”

If the box already contains code, delete it and replace it with your new robots.txt file. Then click "Test" in the bottom right corner of the screen.

If the “Test” text changes to “Allowed,” your robots.txt file is valid.

If you want to learn more, Google's documentation describes the tool and its features in detail.

Once you're done, upload your robots.txt file to your site's root directory (or save it there if one already exists). You now have a powerful tool that can help improve your search visibility.
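If you'd like a quick local check before uploading, Python's `urllib.robotparser` can run a draft file against a list of URLs. This is a sketch, not a replacement for Google's tools. The standard-library parser follows the original exclusion rules: the first matching rule wins, and there are no `*` wildcards inside paths. Google applies the most specific matching rule and supports wildcards, so results can differ for complex files. The helper name `check_urls`, the draft rules and the URLs are illustrative assumptions:

```python
from typing import Dict, Iterable
from urllib.robotparser import RobotFileParser


def check_urls(robots_txt: str, urls: Iterable[str], agent: str = "Googlebot") -> Dict[str, bool]:
    """Report, for each URL, whether `agent` may crawl it under `robots_txt`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {url: parser.can_fetch(agent, url) for url in urls}


# Draft rules to test before uploading (swap in your own file's contents).
draft = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /thank-you/
"""

urls = [
    "https://yoursite.com/",
    "https://yoursite.com/blog/my-post/",
    "https://yoursite.com/thank-you/",
]
for url, allowed in check_urls(draft, urls).items():
    print("Allowed" if allowed else "Blocked", url)
```

To test your real draft, read it with `open("robots.txt", encoding="utf-8").read()` and pass the text to `check_urls`.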

Conclusion

There are many little-known SEO “hacks” that can give you a real advantage. One such hack is to set up your robots.txt file the right way. By doing this, you’re not only enhancing your own SEO but also helping out your visitors.

When search engine bots spend their crawl budget wisely, they can do a better job of showing your content in the SERPs. Setting up your robots.txt file is an easy way to help them. It's mostly a one-time task that needs only occasional small changes.

If you're just starting your first website, setting up robots.txt properly can make a noticeable difference. If you've never tried it before, I highly suggest doing it as soon as possible!